Amid intensifying competition in artificial intelligence, Google has announced the Gemini 2.0 Flash Thinking model. This multimodal reasoning model is designed to work through complex problems quickly while showing its reasoning. Google CEO Sundar Pichai described it on the social media platform X as the company's "most thoughtful model yet."

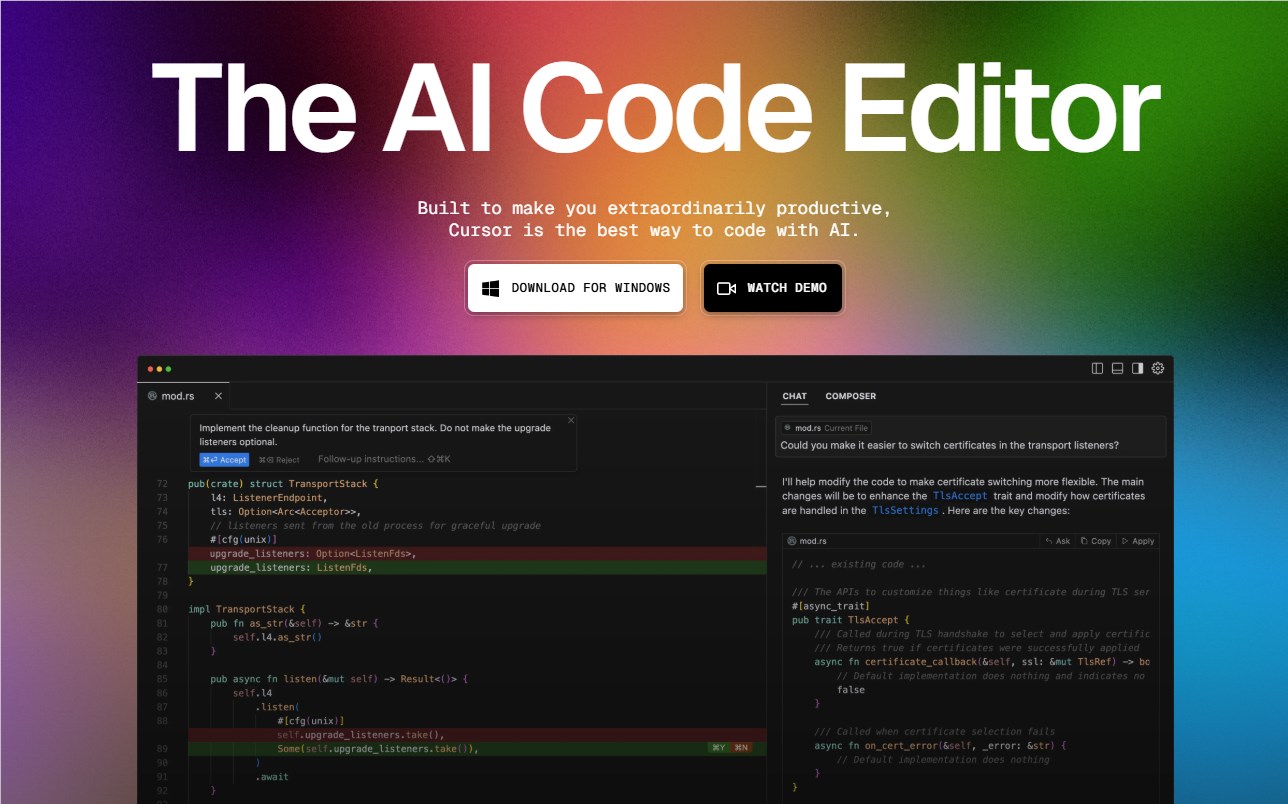

According to the developer documentation, the Gemini 2.0 Flash Thinking model has stronger reasoning capabilities than the base Gemini 2.0 Flash model. The new model accepts up to 32,000 input tokens (about 50 to 60 pages of text) and can produce responses of up to 8,000 tokens. The Google AI Studio sidebar indicates that the model is particularly suited to "multimodal understanding, reasoning," and "coding."
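To make these limits concrete, here is a minimal sketch of a budget check. The 32,000 and 8,000 token limits and the 50 to 60 page range come from the figures above; the rule of thumb of roughly four characters per token is an assumption for English text, not the model's actual tokenizer, and the function names are hypothetical.

```python
import math

# Limits reported in the developer documentation, as cited above.
INPUT_TOKEN_LIMIT = 32_000
OUTPUT_TOKEN_LIMIT = 8_000


def tokens_per_page(pages: int) -> float:
    """Tokens per page implied by fitting `pages` pages into the input limit."""
    return INPUT_TOKEN_LIMIT / pages


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token estimate. ASSUMPTION: about 4 characters per token,
    which is only a heuristic and not the model's real tokenizer."""
    return math.ceil(len(text) / chars_per_token)


def fits_input_limit(text: str, chars_per_token: float = 4.0) -> bool:
    """True if the estimated token count stays within the input limit."""
    return estimate_tokens(text, chars_per_token) <= INPUT_TOKEN_LIMIT


if __name__ == "__main__":
    # 50 to 60 pages in 32,000 tokens implies roughly 533 to 640 tokens per page.
    print(round(tokens_per_page(60)), round(tokens_per_page(50)))
    print(fits_input_limit("word " * 10_000))
```

For an exact count, a real application would rely on the token-counting facility of whichever SDK it uses rather than on a character heuristic.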

Google has not yet disclosed details of the model's training process, architecture, licensing, or pricing, but Google AI Studio currently lists the per-token cost of using the model as zero.

A notable feature of Gemini 2.0 Flash Thinking is that users can open a dropdown menu to view the model's step-by-step reasoning, something not available in competing models such as OpenAI's o1 and o1-mini. This transparency lets users see how the model reaches its conclusions, which helps address the perception of AI as a "black box."

In some simple tests, Gemini 2.0 Flash Thinking answered tricky questions quickly (within one to three seconds) and correctly, such as counting the occurrences of the letter "R" in the word "strawberry." In another test, the model systematically compared two decimal numbers (9.9 and 9.11) by analyzing the whole-number part and then the decimal places.
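Both test questions have deterministic answers, which is what makes them useful checks. The sketch below shows the expected results in plain Python; it illustrates the comparison procedure described above (whole-number part first, then decimal places) and is not a representation of how the model works internally. The function names are hypothetical, and the comparison assumes non-negative numbers written with a whole-number part.

```python
def count_letter(word: str, letter: str) -> int:
    """Count case-insensitive occurrences of a single letter in a word."""
    return word.lower().count(letter.lower())


def larger_decimal(a: str, b: str) -> str:
    """Return the larger of two non-negative decimal strings, or "equal".

    Compares the whole-number parts first, then the fractional digits
    after padding them with trailing zeros to the same length.
    """
    a_whole, _, a_frac = a.partition(".")
    b_whole, _, b_frac = b.partition(".")

    # Step 1: compare the whole-number parts.
    if int(a_whole) != int(b_whole):
        return a if int(a_whole) > int(b_whole) else b

    # Step 2: pad the fractional parts so ".9" becomes ".90" next to ".11".
    width = max(len(a_frac), len(b_frac))
    a_pad = a_frac.ljust(width, "0")
    b_pad = b_frac.ljust(width, "0")
    if a_pad == b_pad:
        return "equal"
    # Equal-length digit strings compare correctly as plain strings.
    return a if a_pad > b_pad else b


if __name__ == "__main__":
    print(count_letter("strawberry", "R"))  # 3
    print(larger_decimal("9.9", "9.11"))    # 9.9
```

The padding step is the part that trips up a naive reading: 9.11 looks larger because 11 is greater than 9, but 0.90 is greater than 0.11.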

LM Arena, an independent evaluation group, has ranked the Gemini 2.0 Flash Thinking model as the top-performing model across all of its large language model categories.

Additionally, the Gemini 2.0 Flash Thinking model has native image upload and analysis capabilities. OpenAI's o1, by contrast, launched as a text-only model and was later extended to image and file analysis. Both models can currently return only text output.

Although the multimodal capabilities of the Gemini 2.0 Flash Thinking model expand its potential application scenarios, developers should note that the model does not currently support integration with Google Search, other Google applications, or external tools. Developers can experiment with the model through Google AI Studio and Vertex AI.

In an increasingly competitive AI market, the Gemini 2.0 Flash Thinking model may mark a new era for problem-solving models. With its ability to handle multiple data types, show its reasoning, and operate at scale, it is a significant competitor in the reasoning AI market, challenging OpenAI's o1 series and other models.

Highlights:

🌟 The Gemini 2.0 Flash Thinking model has strong reasoning capabilities and supports up to 32,000 input tokens and 8,000 output tokens.

💡 The model shows its step-by-step reasoning through a dropdown menu, improving transparency and addressing AI's "black box" problem.

🖼️ It has native image upload and analysis capabilities, expanding multimodal application scenarios.
